Matching searches to the right databases may sound straightforward in theory, but in practice the vast variety of database structures and possible search terms poses a thorny computational challenge. “This is the most interesting data integration problem imaginable,” says Alon Halevy, a former computer science professor at the University of Washington who is now leading a team at Google that is trying to solve the Deep Web conundrum.

With millions of databases connected to the Web, and endless possible permutations of search terms, there is simply no way for any search engine, no matter how powerful, to sift through every possible combination of data on the fly.

“Most search engines try to help you find a needle in a haystack,” Mr. Rajaraman said, “but what we’re trying to do is help you explore the haystack.”

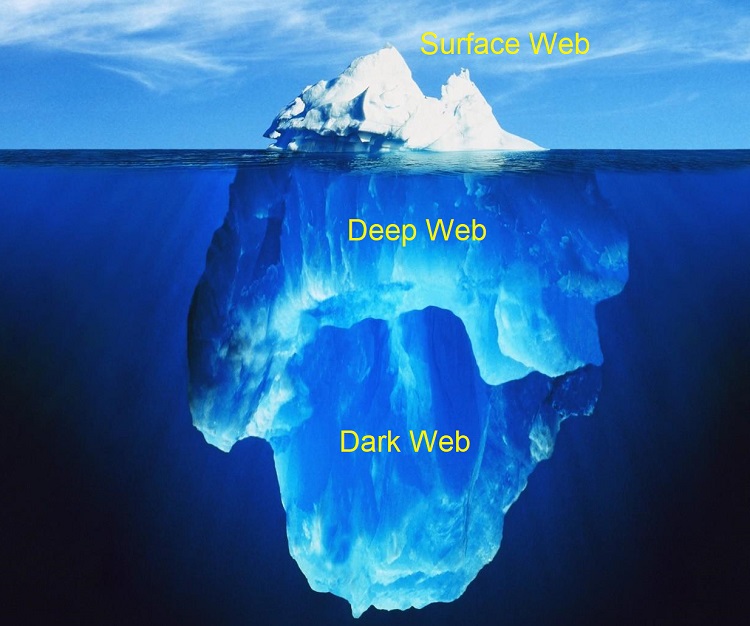

# Deep Web iceberg software

Search engines are the main way to discover new sites, but they have a limit: they manage to find only a very small part of what is called the Internet. Through searches, we only scratch the surface. The Internet is like a floating iceberg that leaves only 10% of itself in sight, and most of it remains hidden.

The Internet that regular users access (blogs, portals, news sources, video sites, etc.) is called the Surface Web or Clear Web. Everything is in sight here, and sites can be reached through search engines. The unseen part of the Internet is called the Deep Web. Its gateway grew out of onion routing, a concept that appeared in 1995 when the US military was trying to communicate with people abroad without being detected. The technology was later made public and, two years after that, the service that works today as a gateway to the Deep Web appeared.

Nowadays the Deep Web contains databases from companies and state institutions: sites or internal databases that are very large and most often contain confidential information. Search engine crawlers are denied access here and cannot discover new information.

The general perception is that the Dark Web does not host exactly the right things. It is true that criminals take advantage of this hidden network to carry on their activity. But information that would endanger those who publish it, if the source were found, can also be shared anonymously here. That is the case of WikiLeaks, which found in this area of the Web an opportunity to reveal certain information. There is even a program called SecureDrop that is integrated with Tor services and offers anonymity to those eager to publish sensitive information.

Search engines rely on programs known as crawlers (or spiders) that gather information by following the trails of hyperlinks that tie the Web together. While that approach works well for the pages that make up the surface Web, these programs have a harder time penetrating databases that are set up to respond to typed queries.

“The crawlable Web is the tip of the iceberg,” says Anand Rajaraman, co-founder of Kosmix (a Deep Web search start-up whose investors include Jeffrey P. …). Kosmix has developed software that matches searches with the databases most likely to yield relevant information, then returns an overview of the topic drawn from multiple sources.
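As a rough illustration of why link-following crawlers miss databases that only answer typed queries, here is a minimal Python sketch. The toy site map `TOY_WEB`, its URLs, and the names `LinkExtractor` and `crawl` are all invented for this example and perform no network access; real crawlers are far more elaborate.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "web": URL -> HTML. Nothing is fetched over a network.
TOY_WEB = {
    "/": '<a href="/news">News</a> <a href="/catalog">Catalog</a>',
    "/news": '<a href="/">Home</a> <a href="/news/today">Today</a>',
    "/news/today": "<p>Static article.</p>",
    # The catalog only exposes its records through a typed query.
    "/catalog": '<form action="/catalog/search"><input name="q"></form>',
    # This page exists, but no hyperlink anywhere points to it.
    "/catalog/search?q=orchids": "<p>Database record: orchids.</p>",
}


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags; forms are deliberately ignored."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(web, start):
    """Breadth-first crawl that only follows hyperlinks; returns visited URLs."""
    seen = {start}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        parser = LinkExtractor()
        parser.feed(web.get(url, ""))
        for link in parser.links:
            if link in web and link not in seen:
                seen.add(link)
                queue.append(link)
    return seen


surface = crawl(TOY_WEB, "/")       # pages reachable by following links
hidden = set(TOY_WEB) - surface     # pages that exist but were never reached
print(sorted(surface))
print(sorted(hidden))
```

In this toy setup the crawl reaches the four linked pages but never the search result page, because that page exists only as the answer to a typed query.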
